Silicon Vox: Accelerated Speech Recognition¶

Silicon Vox accelerates speech-to-text conversion for a variety of recognition applications, such as automated transcription and speech analytics.

Silicon Vox provides a range of functionality: basic decoding of input audio, scanning audio streams in real time for specific phrases, and exploring alternatives in recognized results.

The Silicon Vox API provides a simple, easy-to-use interface to the SV Speech Recognition server.

Overview¶

The Silicon Vox API enables easy, high-level integration with the Silicon Vox accelerated speech recognition system. The basic flow is as follows:

Audio input, an audio file or stream, is described and sent to the speech recognition server. Silicon Vox can recognize real-time audio streams or recorded audio files.

The speech recognition server decodes the Stream.

Decoded results can be stored, scanned, or passed to a user callback. Recognition results for decoded Streams are collected in SpeechData objects, which provide methods for examining the data.

Recognizing Words: Stream, Server.decodestreams¶

The Silicon Vox speech recognition server decodes a Stream. A Stream describes an audio input source (either a file or a TCP socket address) and reflects the status of the decoding.

When decoding audio, Silicon Vox matches words against its vocabulary and gives each word a confidence score. For example, if the speaker says Tahoe, which is out of vocabulary, the decoder might return taco, the closest match in the vocabulary.

The word taco is assigned a confidence score. Other possible words are also stored in the Lattice for this Utterance, such as toe, touch, and Ohio. These possibilities are assigned lower confidence scores.
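The ranking described above can be illustrated with a minimal sketch. It does not use the Silicon Vox API. The dictionary, the function names, and the confidence values are hypothetical and were invented for illustration only.

```python
# Hypothetical candidates for one spoken word ("Tahoe").
# The scores are made up for illustration, not real decoder output.
candidates = {"taco": 0.62, "toe": 0.21, "touch": 0.11, "Ohio": 0.06}


def best_match(scores):
    """Return the (word, confidence) pair with the highest confidence."""
    word = max(scores, key=scores.get)
    return word, scores[word]


def alternatives(scores):
    """Return the remaining words, highest confidence first."""
    top, _ = best_match(scores)
    others = (word for word in scores if word != top)
    return sorted(others, key=scores.get, reverse=True)


decoded_word, confidence = best_match(candidates)
other_words = alternatives(candidates)
```

Here `decoded_word` plays the role of the decoded result, while `other_words` corresponds to the lower-confidence possibilities that remain available in the Lattice.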

Storing Results: Utterance and SpeechData¶

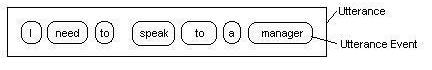

Silicon Vox segments spoken audio into utterances based on the level and duration of voice activity. The decoded audio for each utterance is stored in an Utterance object. Utterances are collected in a SpeechData object for the decoded Stream.

An Utterance object contains a time-ordered list of the decoded events (words) as well as the Lattice of word possibilities explored by the decoder. Each UtteranceEvent contains a word and its location, noted by its start and end times in the audio stream.
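The containment relationship between these objects can be modeled roughly as follows. This is a sketch of the data layout only, not the Silicon Vox classes. The field names (`word`, `start`, `end`, `events`, `utterances`), the helper methods, and the use of seconds as the time unit are assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UtteranceEvent:
    """One decoded word and its location in the audio (hypothetical fields)."""
    word: str
    start: float  # assumed unit: seconds from the start of the audio
    end: float


@dataclass
class Utterance:
    """A list of decoded events for one segment of speech."""
    events: List[UtteranceEvent] = field(default_factory=list)

    def text(self) -> str:
        # Join the words in time order, regardless of insertion order.
        ordered = sorted(self.events, key=lambda event: event.start)
        return " ".join(event.word for event in ordered)


@dataclass
class SpeechData:
    """Collects the Utterances decoded from one Stream."""
    utterances: List[Utterance] = field(default_factory=list)

    def transcript(self) -> List[str]:
        return [utterance.text() for utterance in self.utterances]


utterance = Utterance([
    UtteranceEvent("to", 0.4, 0.5),
    UtteranceEvent("drive", 0.0, 0.4),
    UtteranceEvent("taco", 0.5, 0.9),
])
data = SpeechData([utterance])
```

The Lattice is left out of this sketch to keep it short.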

Other Word Possibilities: Lattice¶

A Lattice is the matrix of speech possibilities from a decoded source. A Lattice contains all the data decoded by the server for an Utterance.

A Lattice has two dimensions: the time divisions of the audio stream, and the possible words found at each time division, stored as an ordered list of LatticeEntry objects.

Each word in the Lattice has a confidence, indicating how sure the system is that the word is correct. For example, audio recorded in a noisy environment could lower the confidence.

A Lattice enables you to explore forks where various word possibilities have similar confidence scores.
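One way to picture a fork is a time division where the two best candidates are nearly tied. The sketch below models a lattice as a plain list of lists and is not the Silicon Vox Lattice class. The `LatticeEntry` fields, the `find_forks` helper, the `margin` threshold, and the sample scores are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LatticeEntry:
    """A candidate word at one time division (hypothetical fields)."""
    word: str
    confidence: float


def find_forks(lattice: List[List[LatticeEntry]], margin: float = 0.1) -> List[int]:
    """Return the indices of time divisions whose top two candidates
    have confidence scores within `margin` of each other."""
    forks = []
    for index, entries in enumerate(lattice):
        ranked = sorted(entries, key=lambda entry: entry.confidence, reverse=True)
        if len(ranked) >= 2 and ranked[0].confidence - ranked[1].confidence <= margin:
            forks.append(index)
    return forks


# Illustrative lattice: one inner list per time division.
lattice = [
    [LatticeEntry("drive", 0.90), LatticeEntry("dive", 0.05)],
    [LatticeEntry("taco", 0.48), LatticeEntry("toe", 0.45), LatticeEntry("Ohio", 0.07)],
]
fork_indices = find_forks(lattice)
```

In this made-up data, only the second time division is a fork, because taco and toe score within the margin of each other.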

Finding Words: Scanners¶

Silicon Vox provides Scanners to find a specified word or phrase in an input Stream or in the decoded results held by a SpeechData object. If the target phrase is detected, the Scanner calls the callback you provided.

The callback is the function called whenever a match occurs. It is passed the target phrase and the Utterance that matched the phrase.
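The scan-and-callback pattern can be sketched independently of the Silicon Vox API. In this illustration the `PhraseScanner` class, its `scan` method, and the representation of an utterance as a plain list of words are all assumptions. Matching is a simple case-insensitive comparison of consecutive words.

```python
class PhraseScanner:
    """Hypothetical scanner: calls `callback(phrase, utterance_words)`
    for every utterance that contains the target phrase."""

    def __init__(self, phrase, callback):
        self.phrase = phrase
        self.target = phrase.lower().split()
        if not self.target:
            raise ValueError("phrase must contain at least one word")
        self.callback = callback

    def scan(self, utterances):
        """Scan an iterable of utterances, each given as a list of words."""
        size = len(self.target)
        for words in utterances:
            lowered = [word.lower() for word in words]
            # Slide a window of `size` words across the utterance.
            for start in range(len(lowered) - size + 1):
                if lowered[start:start + size] == self.target:
                    self.callback(self.phrase, words)
                    break  # report each utterance at most once


matches = []


def on_match(phrase, utterance_words):
    # Record the match; a real callback might log or raise an alert.
    matches.append((phrase, utterance_words))


scanner = PhraseScanner("lake tahoe", on_match)
scanner.scan([
    ["drive", "to", "Lake", "Tahoe"],
    ["order", "a", "taco"],
])
```

After the scan, `matches` holds one entry, for the first utterance only.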

Timestamps¶

For real-time streams, audio is timestamped with the date and time at which the server received the audio data. To ensure accurate timestamps when using streams, the speech recognition server must therefore be synchronized with a time server.

For audio files, the beginning of the file is timestamped as 00:00:00, with the date set to 01/01/0001.
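The file convention matches the smallest value of Python's standard `datetime` type, `datetime.min`, which is midnight on 0001-01-01. Assuming file timestamps are exposed as `datetime` values (an assumption, not something stated here), converting between a timestamp and an offset into the file could look like this sketch. The helper names are hypothetical.

```python
from datetime import datetime, timedelta

# Start-of-file timestamp for recorded audio: 00:00:00 on 01/01/0001.
FILE_EPOCH = datetime.min


def file_timestamp(offset_seconds: float) -> datetime:
    """Timestamp for a point `offset_seconds` into an audio file."""
    return FILE_EPOCH + timedelta(seconds=offset_seconds)


def file_offset(timestamp: datetime) -> float:
    """Seconds from the start of the file for a file timestamp."""
    return (timestamp - FILE_EPOCH).total_seconds()


word_start = file_timestamp(83.5)  # 1 minute 23.5 seconds into the file
```

Working with offsets in this way avoids treating the year 0001 date as a real calendar date.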